The optimum probability distribution that minimizes the absolute redundancy $G(P)$, $P = (p_1, p_2, \ldots, p_n)$, of the source with entropy $K(P)$ and mean codeword length $L$ is the escort distribution given by $p_i = D^{-l_i} / \sum_{i=1}^{n} D^{-l_i}$, $i = 1, 2, \ldots, n$ (2.13). The 100 atoms of crystal A continually exchange energy among themselves and transfer from one of these $1.0 \times 10^{44}$ arrangements to another in rapid succession.

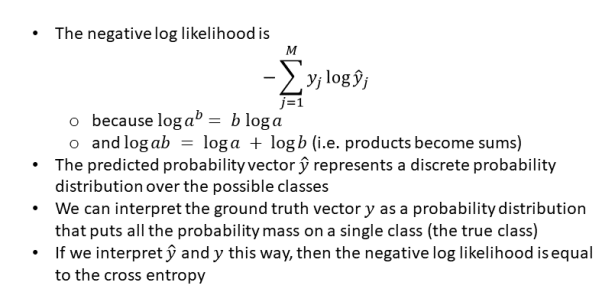

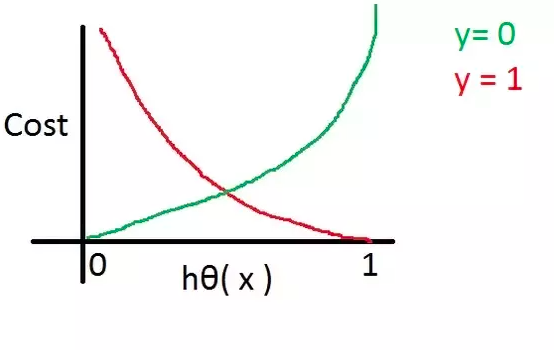

A Gentle Introduction To Cross Entropy For Machine Learning

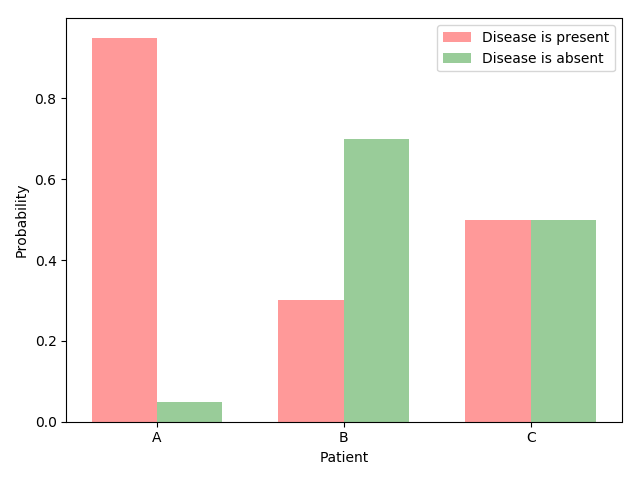

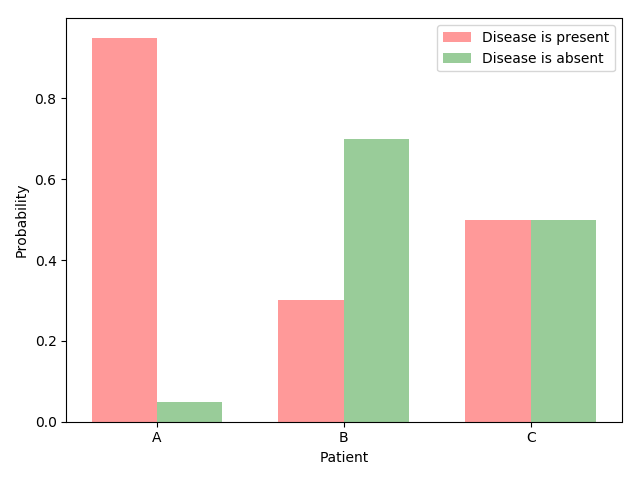

The probabilities of fine and not fine are both 0.5.

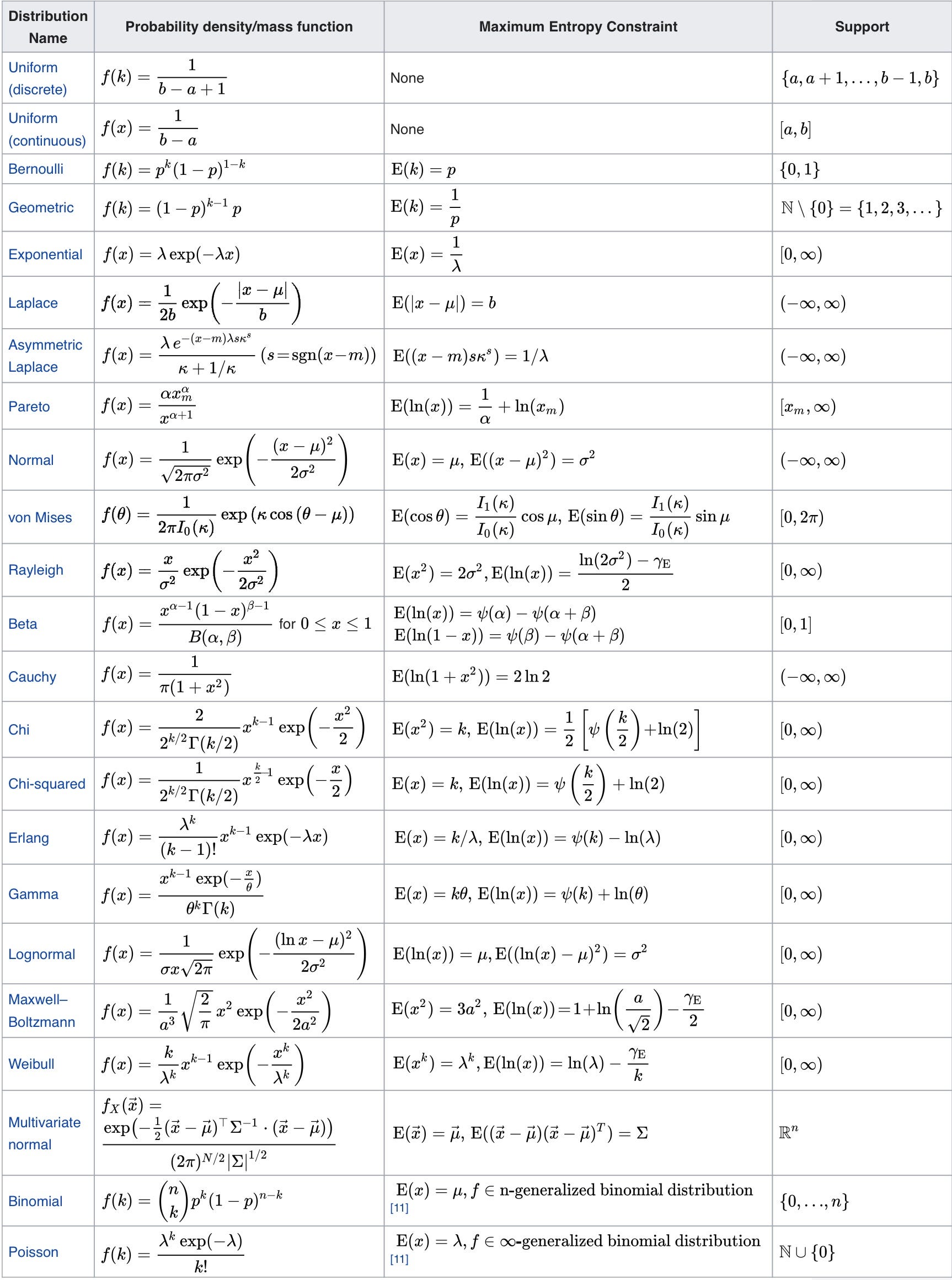

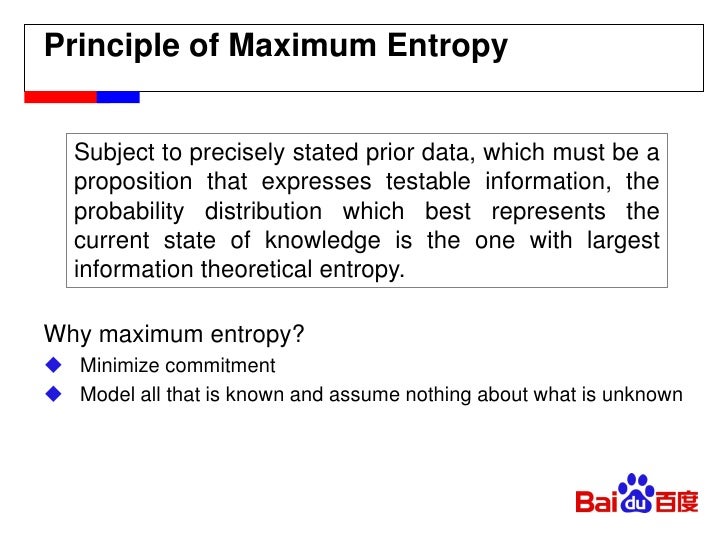

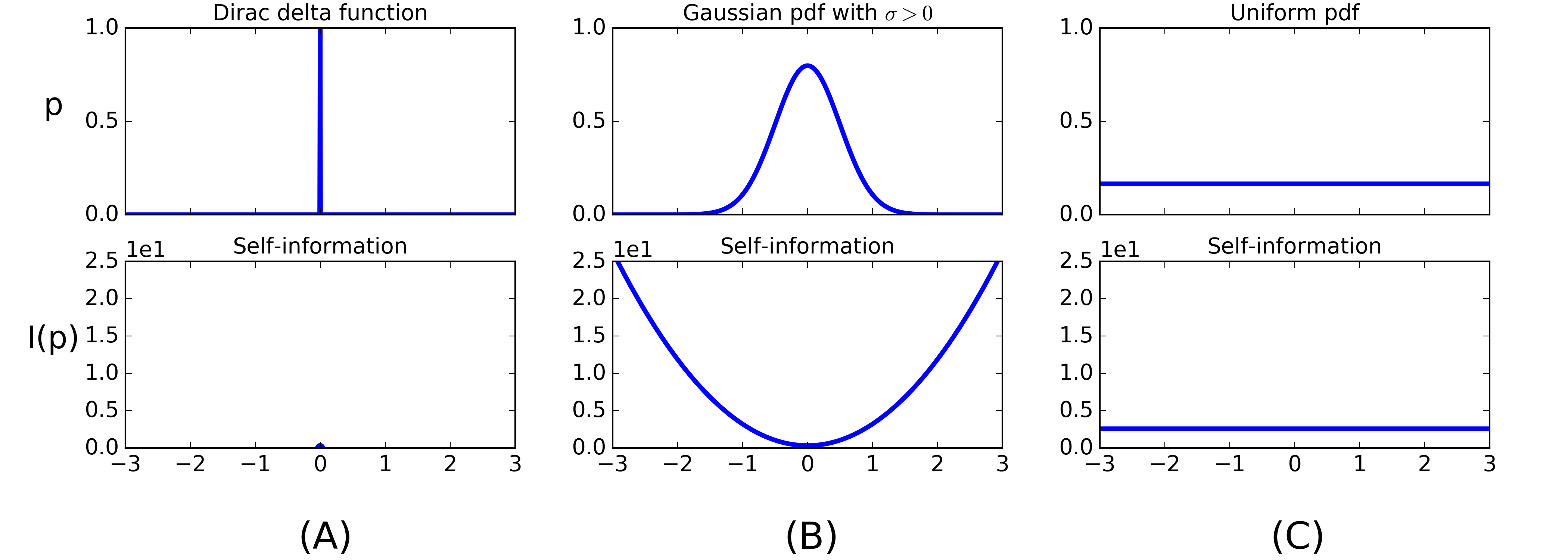

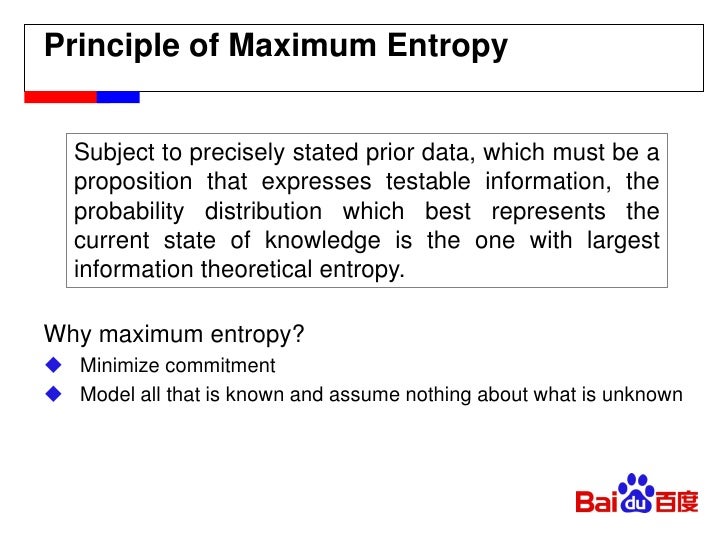

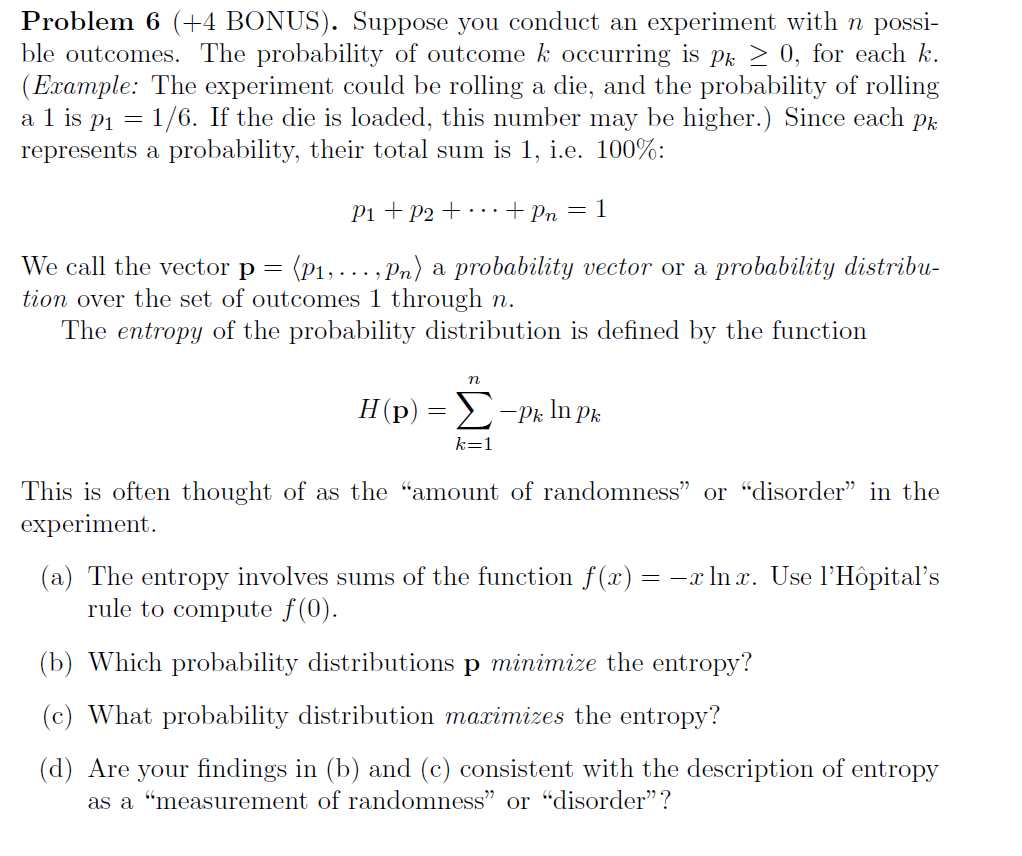

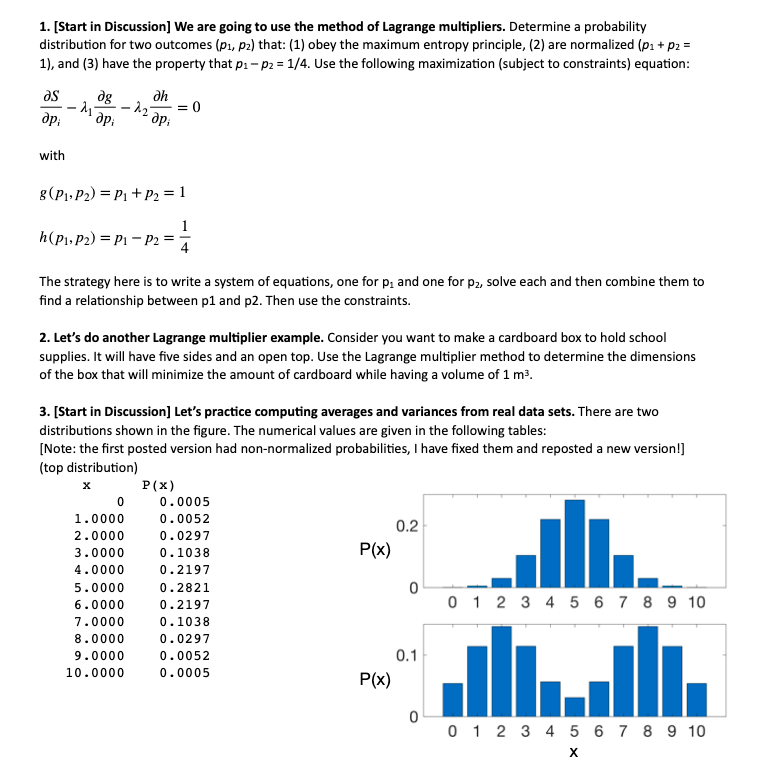

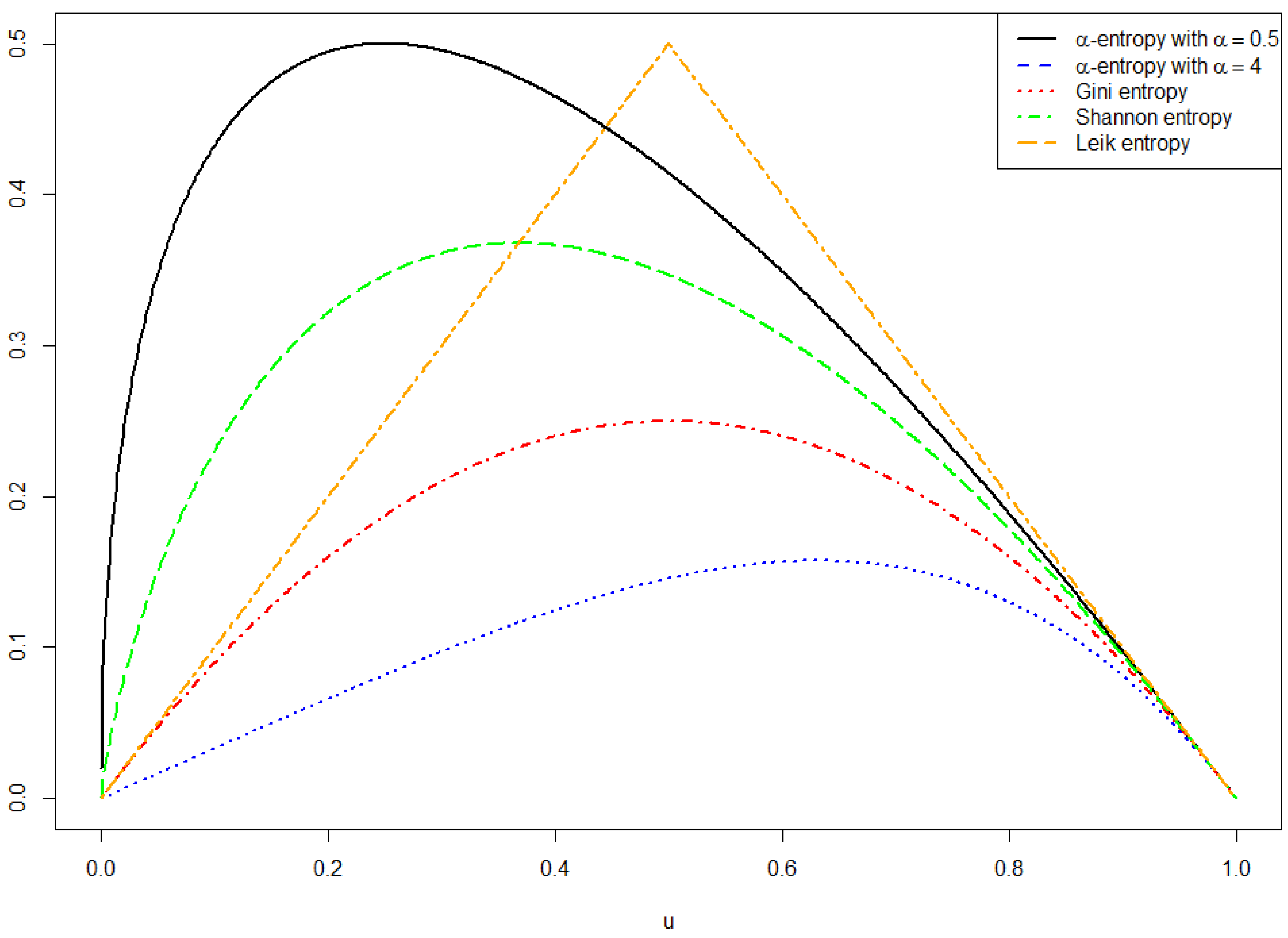

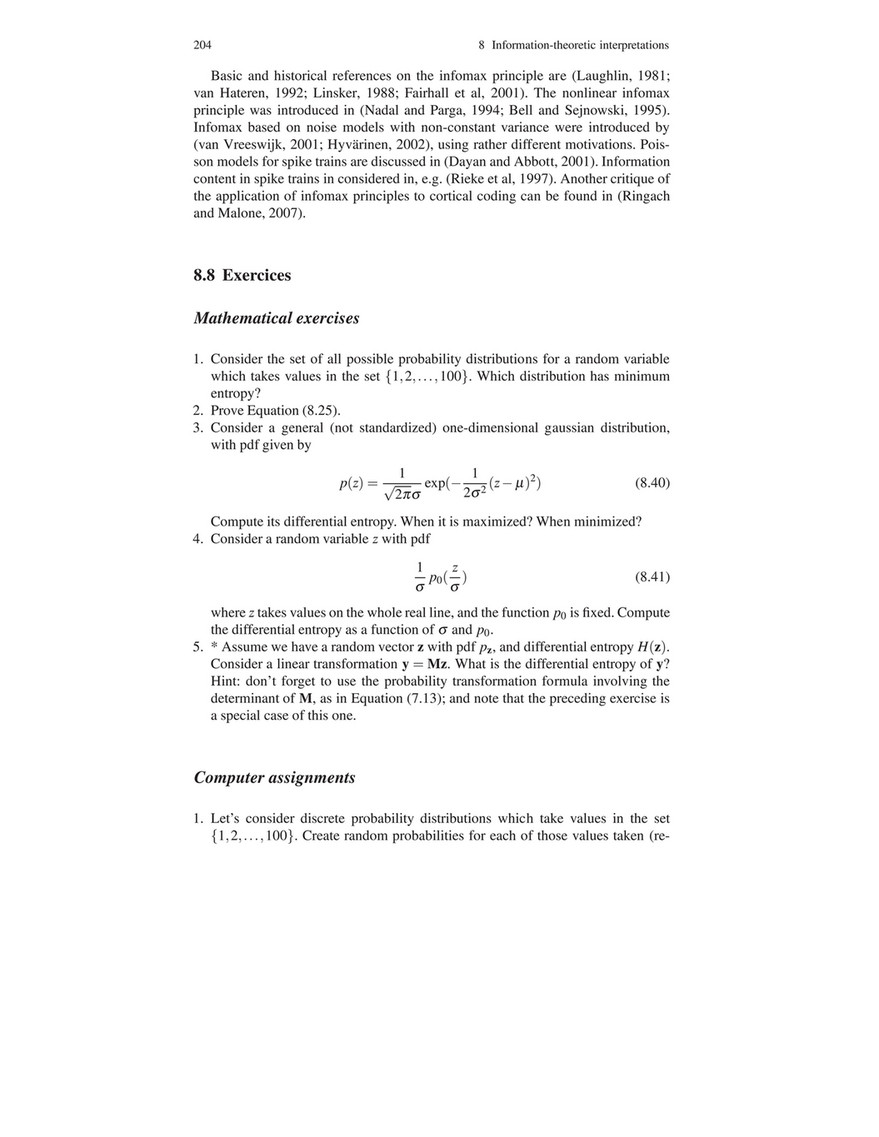

Which probability distribution minimizes entropy? The concept of information entropy was introduced by Claude Shannon in his 1948 paper "A Mathematical Theory of Communication". To maximize entropy, we want to minimize the following function. According to the principle of maximum entropy, if nothing is known about a distribution except that it belongs to a certain class, then the distribution with the largest entropy should be chosen as the least informative default.

Balanced probability distribution (surprising). First, maximizing entropy minimizes the amount ... Another way of stating this.

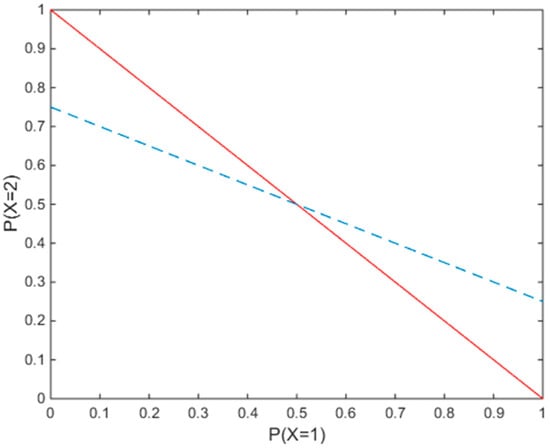

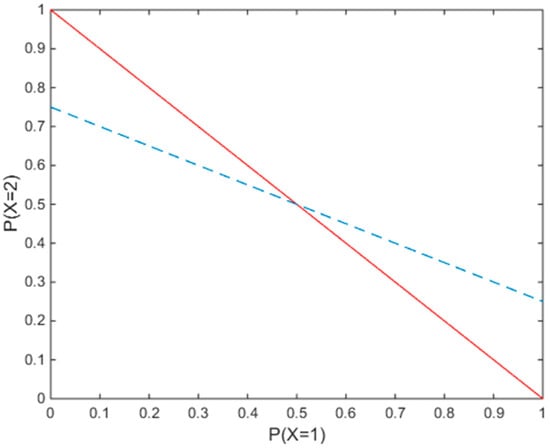

So our model, the probability distribution of the weather, is the uniform distribution. The diagram below shows that the entropy of our probability distribution reaches its maximum at p = 0.5. The motivation is twofold.

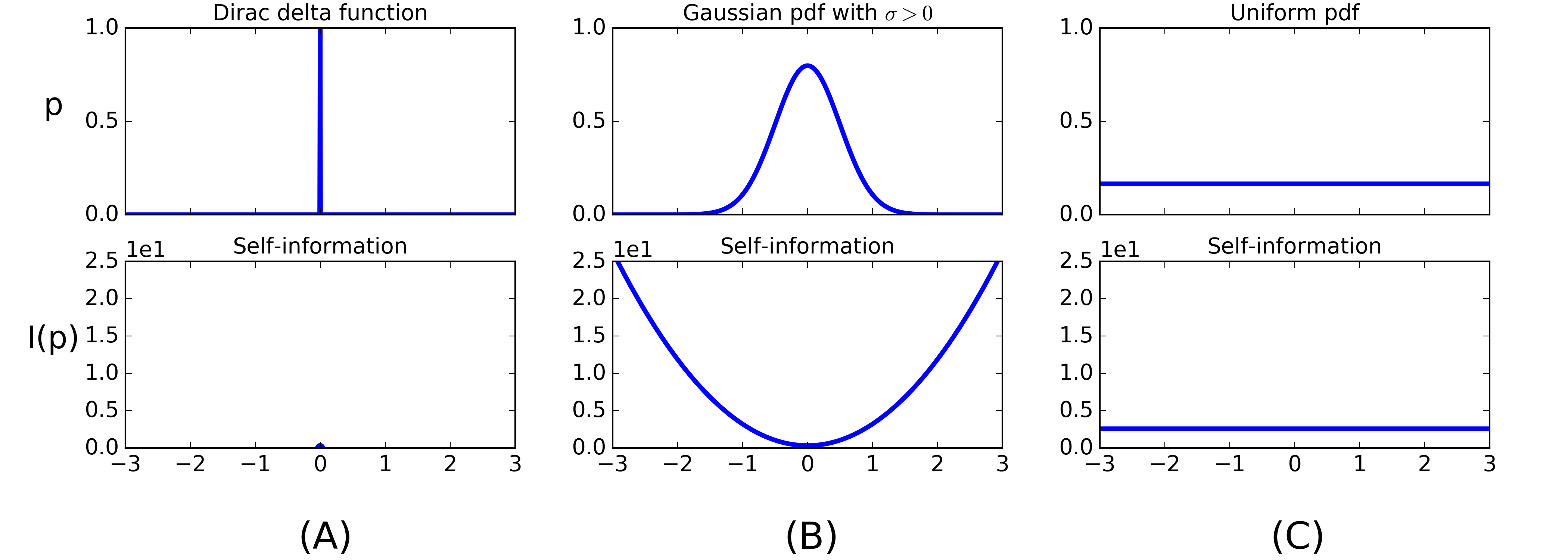

If we transition from skewed to equal probability of events in the distribution, we would expect entropy to start low and increase: specifically, from the lowest entropy of 0.0 for events with impossibility/certainty (probability of 0 and 1, respectively) to the largest entropy of 1.0 for events with equal probability. Take precisely stated prior data or testable information about a probability distribution function. The principle of maximum entropy states that the probability distribution which best represents the current state of knowledge is the one with the largest entropy, in the context of precisely stated prior data (such as a proposition that expresses testable information).
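The move from skewed to equal probabilities can be checked numerically. The following is a minimal sketch, assuming a two-event distribution and entropy measured in bits (log base 2); the helper name `shannon_entropy` is illustrative and not taken from any of the sources quoted here.

```python
import math

def shannon_entropy(probs):
    """Shannon entropy, in bits, of a discrete distribution given as a list."""
    if abs(sum(probs) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    # Events with p == 0 are skipped: p * log2(p) tends to 0 as p tends to 0.
    return sum(-p * math.log2(p) for p in probs if p > 0)

# Walk from a certain outcome (fully skewed) to two equally likely outcomes.
for p in (1.0, 0.9, 0.7, 0.5):
    h = shannon_entropy([p, 1.0 - p])
    print(f"p = {p:.1f}: H = {h:.3f} bits")
```

The printed values should rise steadily as the two probabilities approach each other, with the certain outcome at the bottom and the balanced case at the top.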

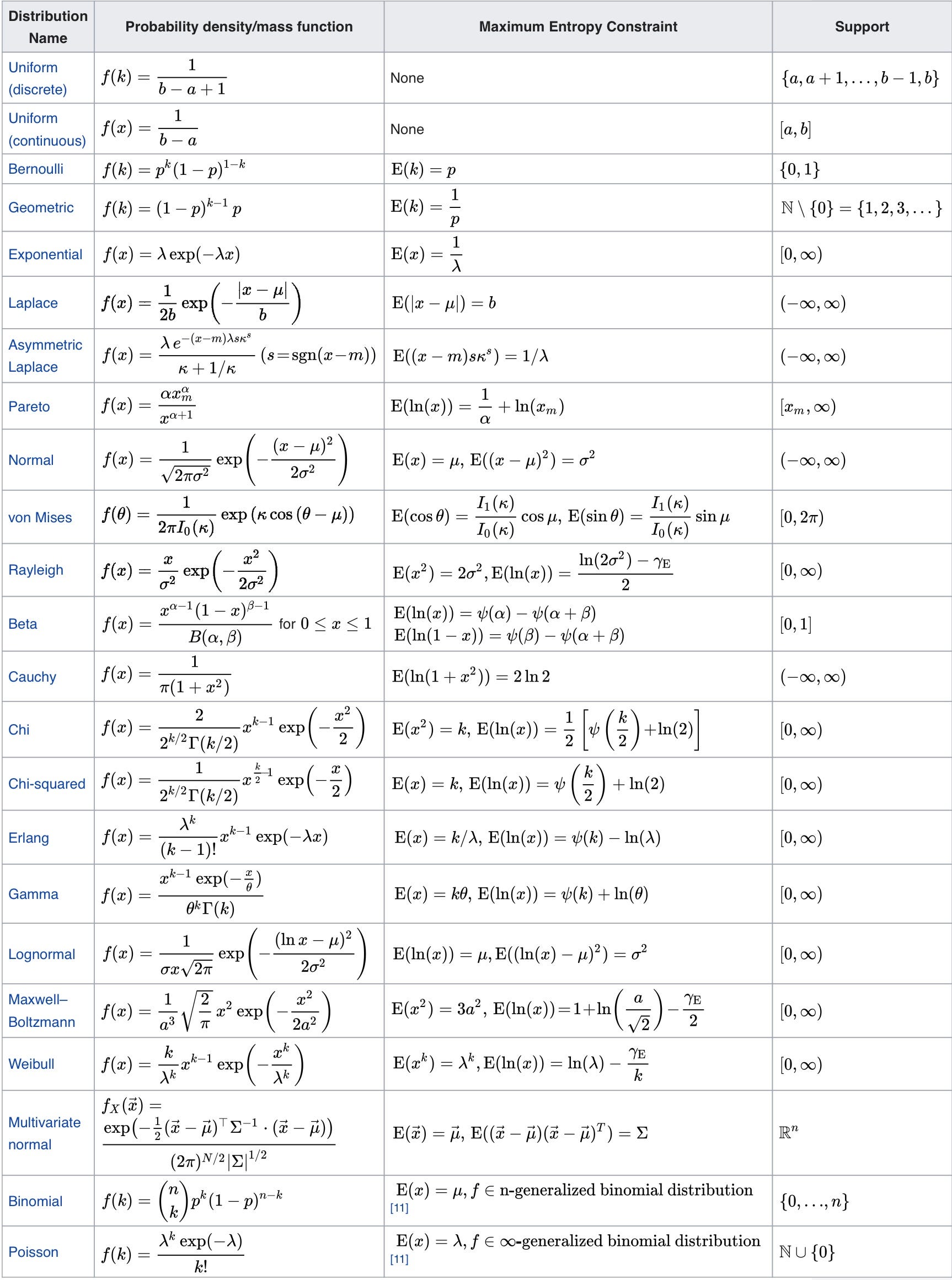

In statistics and information theory, a maximum entropy probability distribution has entropy that is at least as great as that of all other members of a specified class of probability distributions. Derivation of the maximum entropy probability distribution of a half-bounded random variable with fixed mean $\bar{r}$ (exponential distribution): now constrain on a fixed mean, but no fixed variance, which we will see gives the exponential distribution. As an example, consider a biased coin with probability p of landing on heads and probability 1 − p of landing on tails.
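The fixed-mean claim can be illustrated with a spot check rather than a proof. The sketch below assumes three distributions on the non-negative half-line that share the same mean and compares their differential entropies (in nats) using standard closed-form expressions. The uniform and half-normal competitors, and all function names, are illustrative choices and do not come from the quoted derivation.

```python
import math

def exponential_entropy(mean):
    # Exponential with rate 1/mean: h = 1 + ln(mean).
    return 1.0 + math.log(mean)

def uniform_entropy(mean):
    # Uniform on [0, 2 * mean] has the requested mean: h = ln(2 * mean).
    return math.log(2.0 * mean)

def half_normal_entropy(mean):
    # Half-normal with scale sigma has mean sigma * sqrt(2 / pi).
    sigma = mean * math.sqrt(math.pi / 2.0)
    # h = 0.5 * ln(pi * sigma^2 / 2) + 0.5
    return 0.5 * math.log(math.pi * sigma ** 2 / 2.0) + 0.5

for mean in (0.5, 1.0, 3.0):
    print(
        f"mean = {mean}: "
        f"exponential = {exponential_entropy(mean):.4f}, "
        f"half-normal = {half_normal_entropy(mean):.4f}, "
        f"uniform = {uniform_entropy(mean):.4f}"
    )
```

For each mean the exponential entry should be the largest of the three, which is consistent with (though of course does not prove) the exponential being the maximum entropy distribution under a fixed-mean constraint.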

As an extreme case, imagine one event getting a probability of almost one; the other events will then have a combined probability of almost zero, and the entropy will be very low. Thus $W_1$, the initial thermodynamic probability, is $1.0 \times 10^{44}$. Here $p_i$ is the probability of the $i$-th event occurring.

In information theory, the entropy of a random variable is the average level of information, surprise, or uncertainty inherent in the variable's possible outcomes. Therefore the entropy, being the expected information content, will go down, since the event with lower information content will be weighted more. Let's plot the entropy and visually confirm that p = 0.5 gives the maximum.
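As a numerical stand-in for the plot, a coarse grid search gives the same confirmation. This is a sketch under the assumption of a two-outcome variable with entropy in bits; `binary_entropy` and the grid spacing of 0.01 are illustrative choices.

```python
import math

def binary_entropy(p):
    """Entropy, in bits, of a two-outcome variable with probabilities p and 1 - p."""
    if p in (0.0, 1.0):
        return 0.0  # a certain outcome carries no uncertainty
    return -p * math.log2(p) - (1.0 - p) * math.log2(1.0 - p)

# Evaluate the entropy on a grid of probabilities from 0 to 1 in steps of 0.01.
grid = [i / 100 for i in range(101)]
best_p = max(grid, key=binary_entropy)

print(f"entropy is largest at p = {best_p}, where H = {binary_entropy(best_p)} bits")
```

Passing `grid` and the corresponding entropies to any plotting library would reproduce the usual curve, symmetric about its peak.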

It is clear from Figure 1 that entropy is just the negative of the weighted average of the log of the probability of each event in the ... Calculations show that there are $1.0 \times 10^{44}$ alternative ways of making this distribution.
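Written out, the "negative weighted average of the log probability" is the standard Shannon entropy formula. The symbols here are assumptions for illustration: $X$ is a discrete random variable with $n$ possible events, and $p_i$ is the probability of the $i$-th event.

$$
H(X) = -\sum_{i=1}^{n} p_i \log p_i
$$

With a base-2 logarithm the result is in bits, and terms with $p_i = 0$ are taken to contribute zero.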

Entropy Is A Measure Of Uncertainty By Sebastian Kwiatkowski Towards Data Science

Shannon Entropy In The Context Of Machine Learning And Ai By Frank Preiswerk The Startup Medium

When It Is Conditional Entropy Minimized Mathematics Stack Exchange

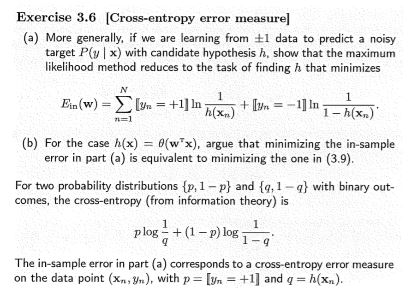

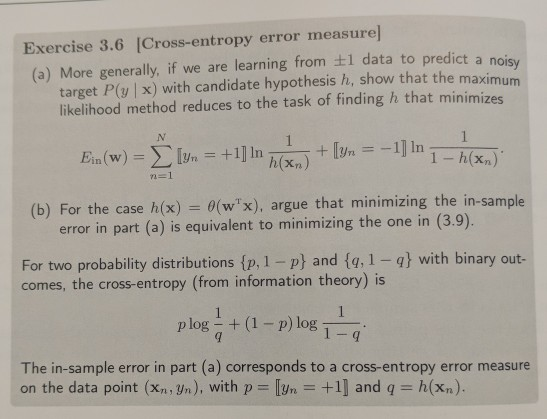

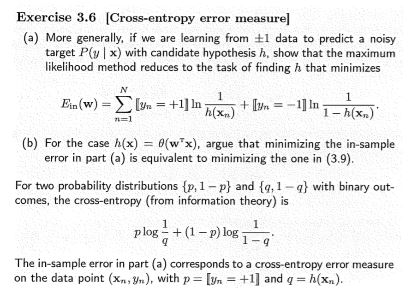

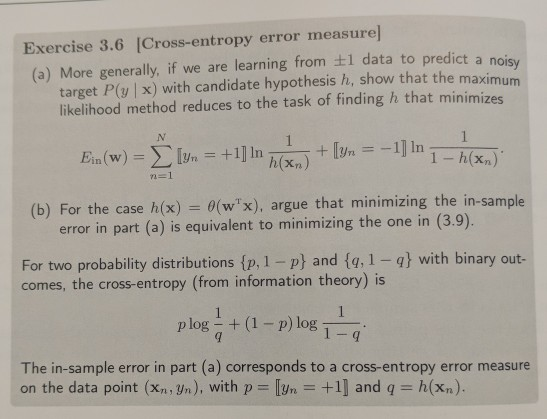

Exercise 3 6 Icross Entropy Error Measure A Mor Chegg Com

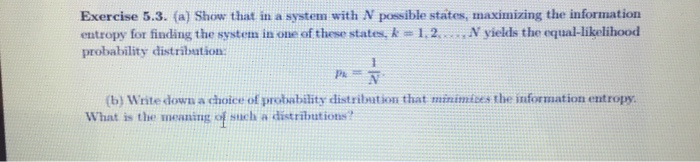

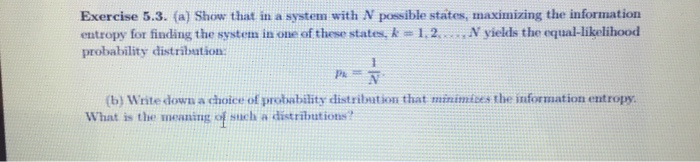

Solved Exercise 5 3 A Show That In A System With N Pos Chegg Com

Normal Distribution Demystified Understanding The Maximum Entropy By Naoki Towards Data Science

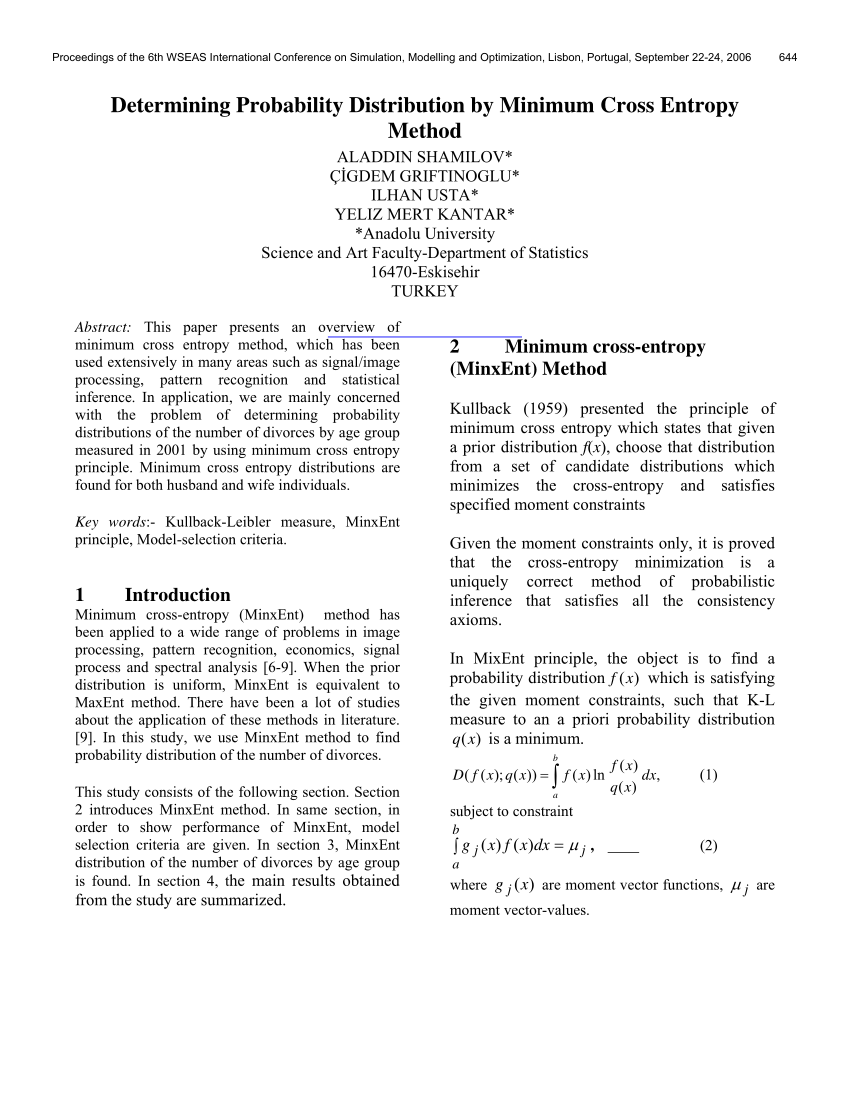

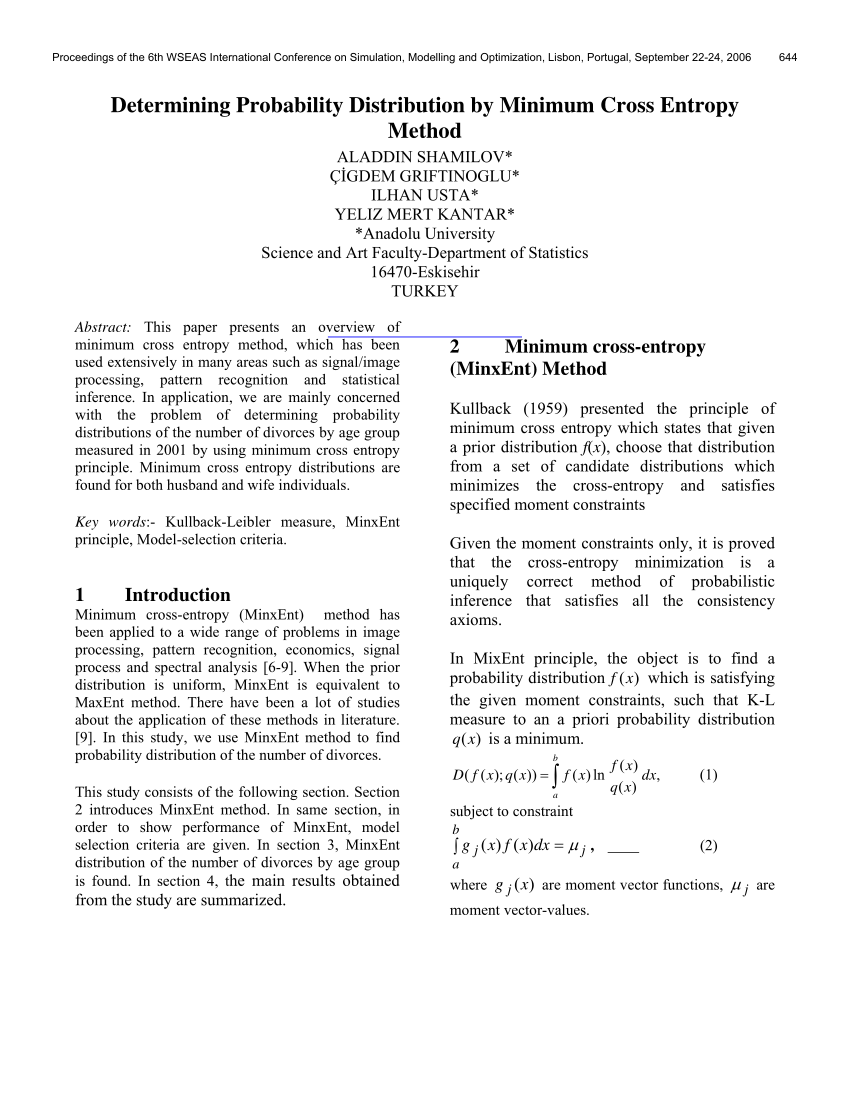

Pdf Determining Probability Distribution By Minimum Cross Entropy Method

Principle Of Maximum Entropy

Maximum Entropy Probability Distribution Wikipedia

Entropy Free Full Text A Kullback Leibler View Of Maximum Entropy And Maximum Log Probability Methods

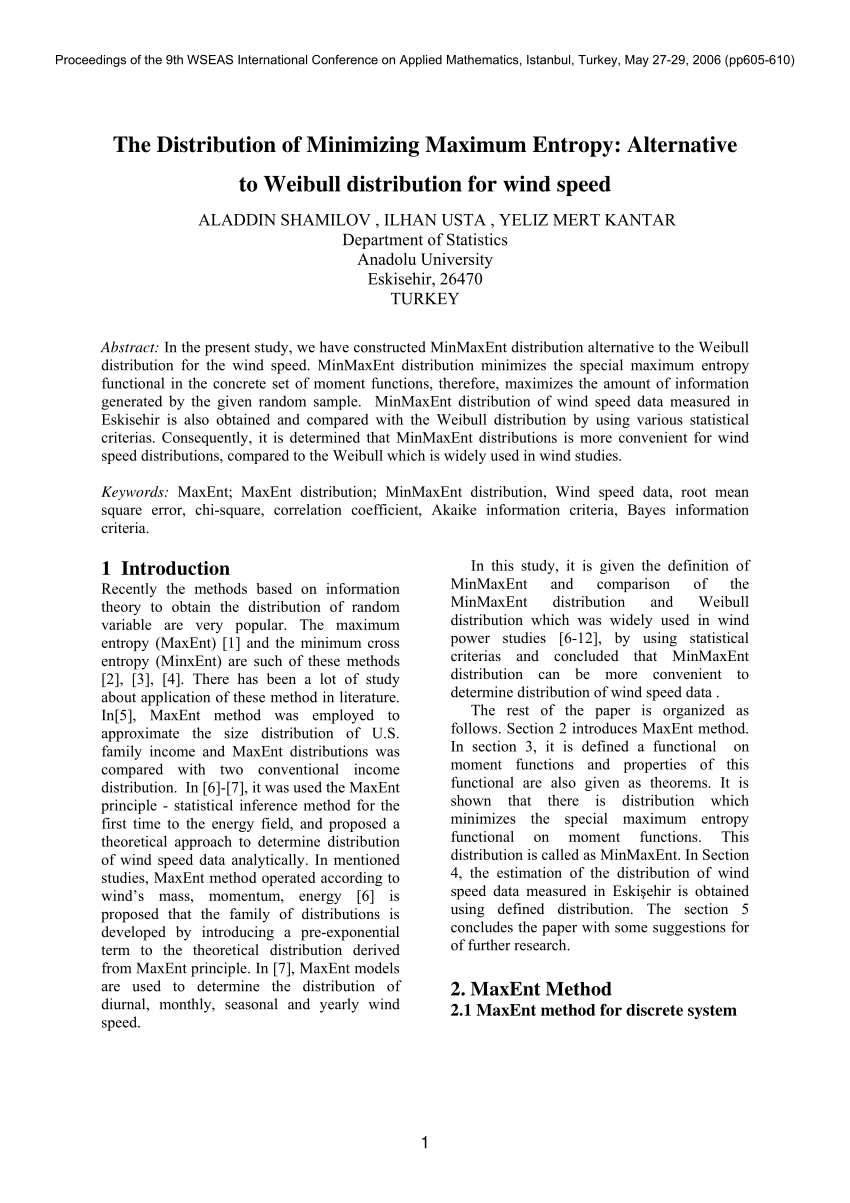

Pdf The Distribution Of Minimizing Maximum Entropy Alternative To Weibull Distribution For Wind Speed

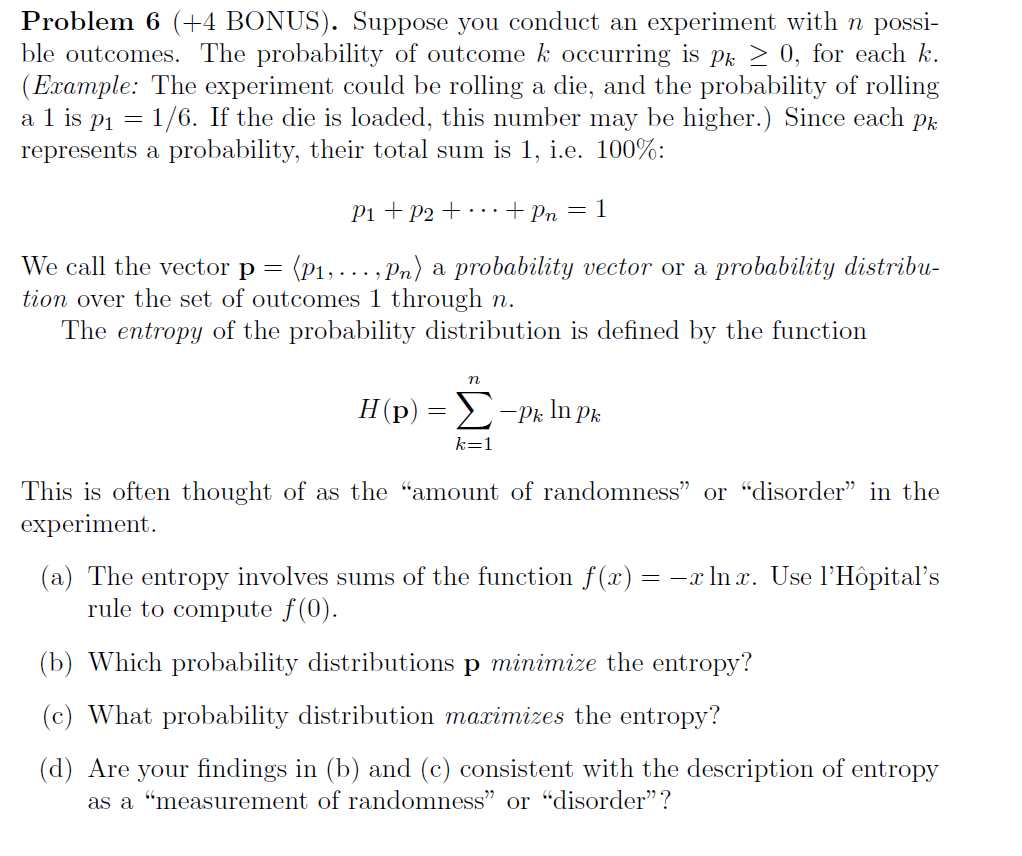

Solved Problem 6 4 Bonus Suppose You Conduct An Exper Chegg Com

Principle Of Maximum Entropy Wikipedia

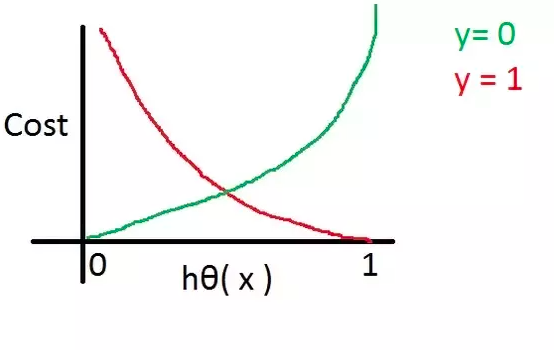

Detailed Description On Cross Entropy Loss Function

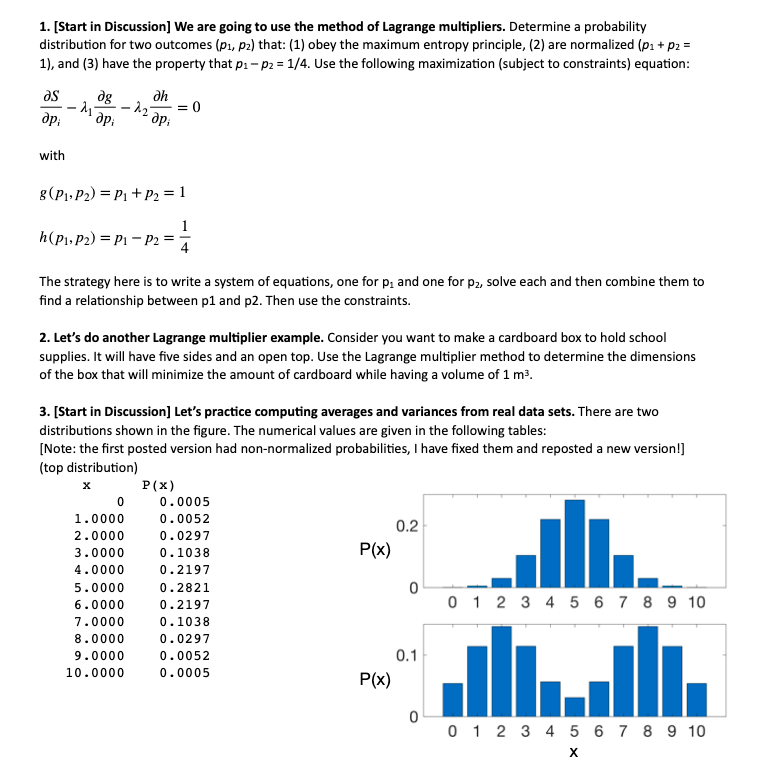

We Are Going To Use The Method Of Lagrange Multipl Chegg Com

Pdf Reasoning With Probabilities And Maximum Entropy The System Pit And Its Application In Lexmed

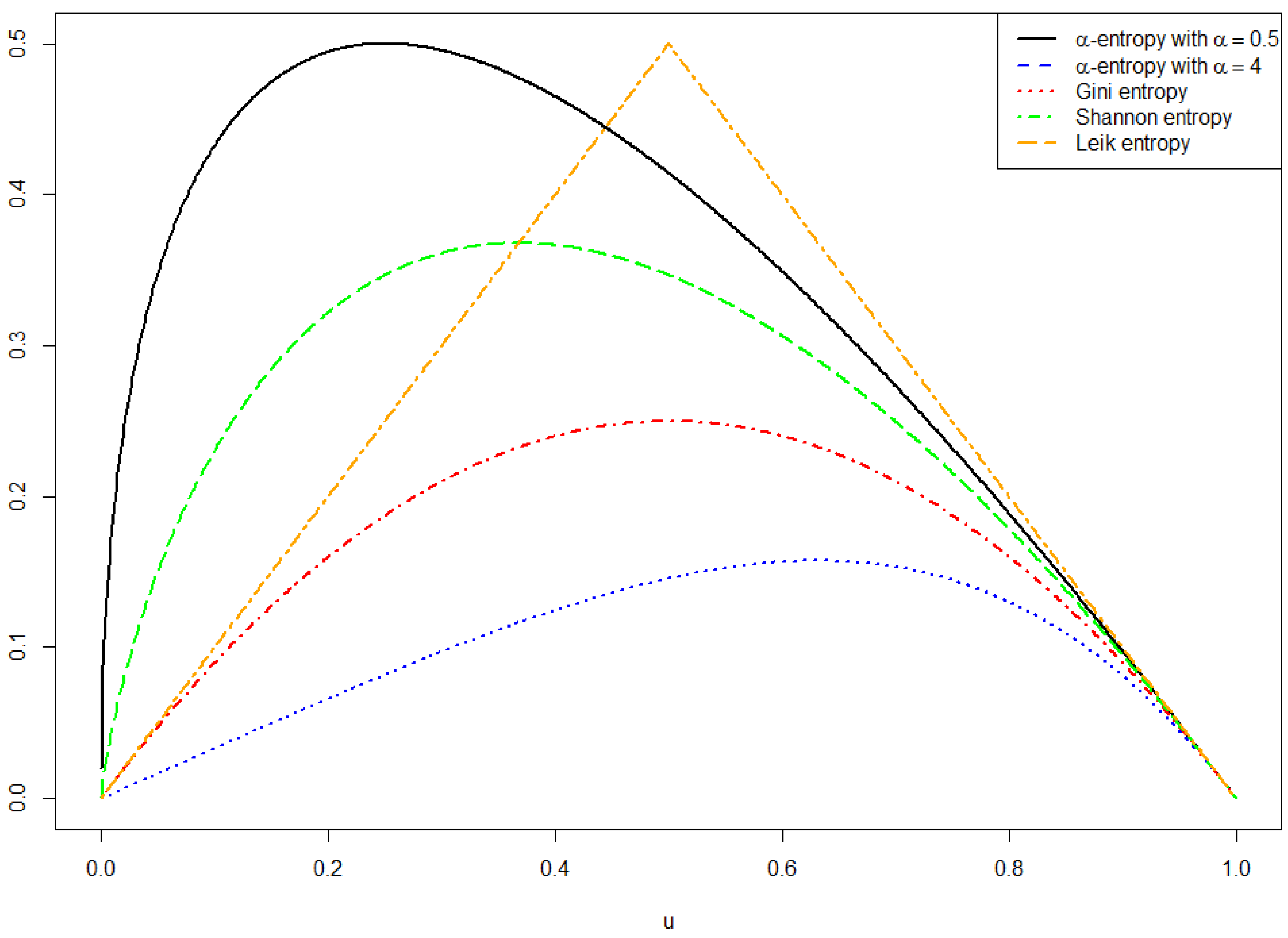

Entropy Free Full Text Cumulative Paired F Entropy Html

Https Arxiv Org Pdf 1505 01066

Cross Entropy For Tensorflow Mustafa Murat Arat

Exercise 3 6 Cross Entropy Error Measure A More Chegg Com

Http Yaroslavvb Com Papers Abbas Entropy Pdf

Archived Post Review For Softmax And Loss Functions By Jae Duk Seo Medium

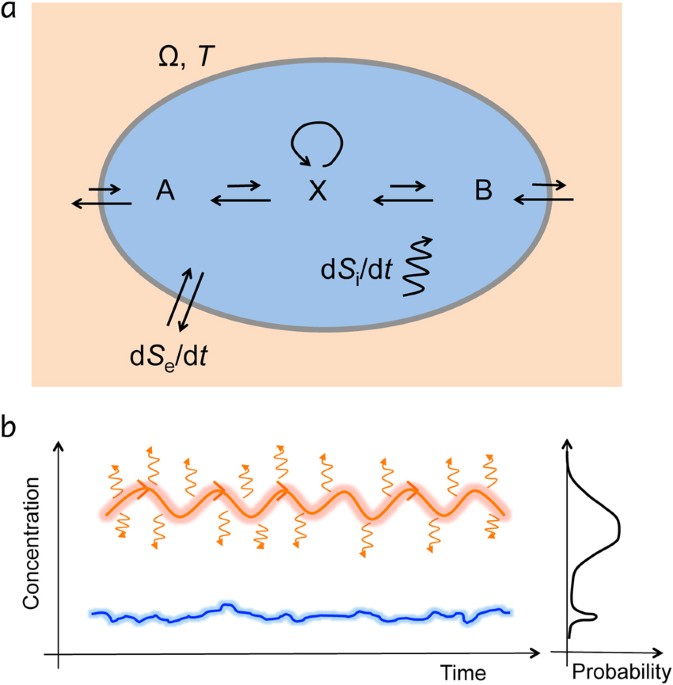

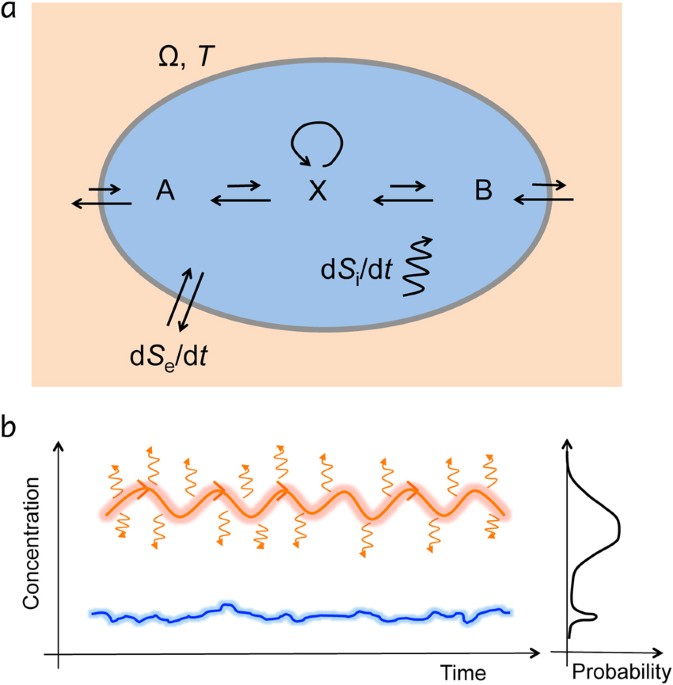

Entropy Production Selects Nonequilibrium States In Multistable Systems Scientific Reports

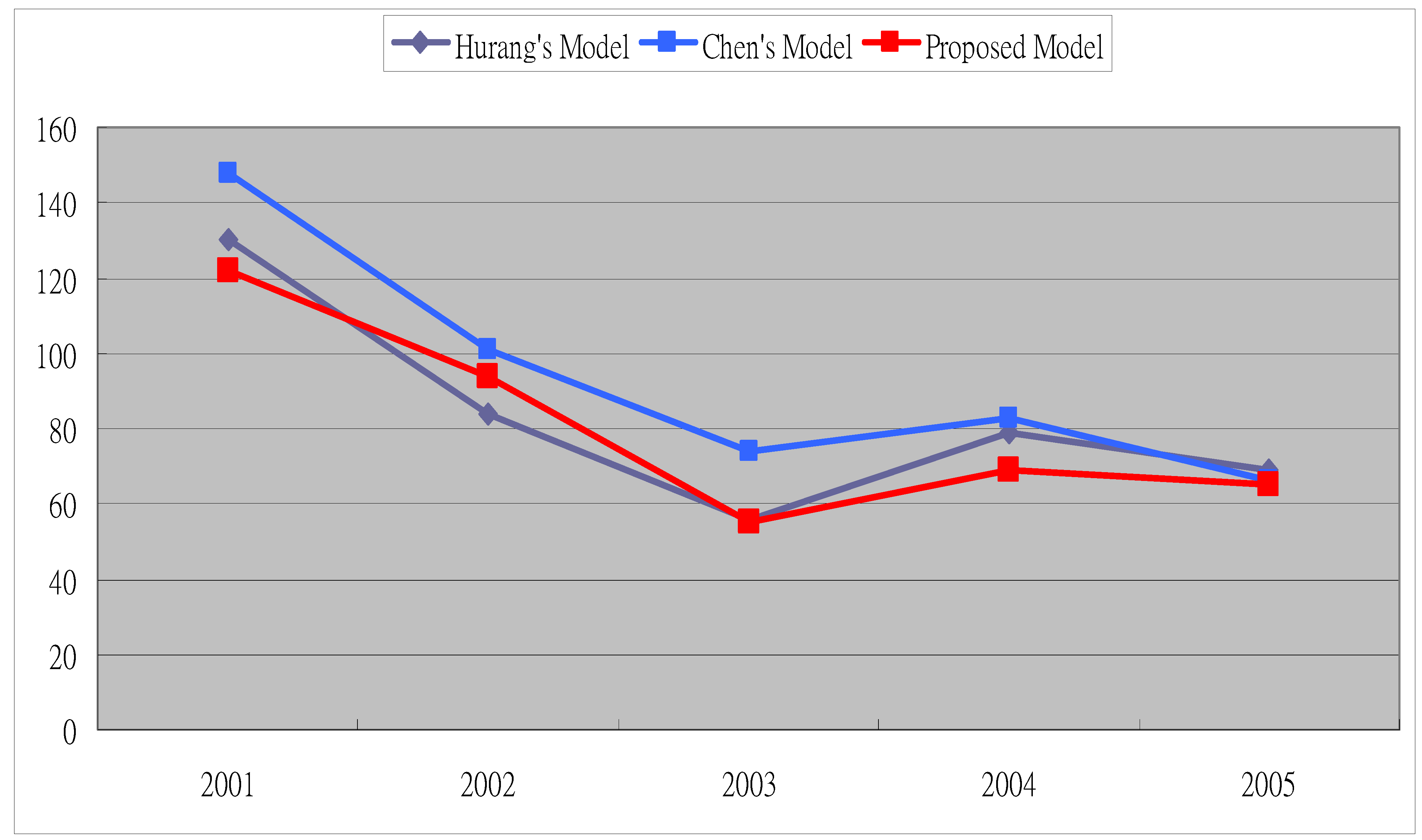

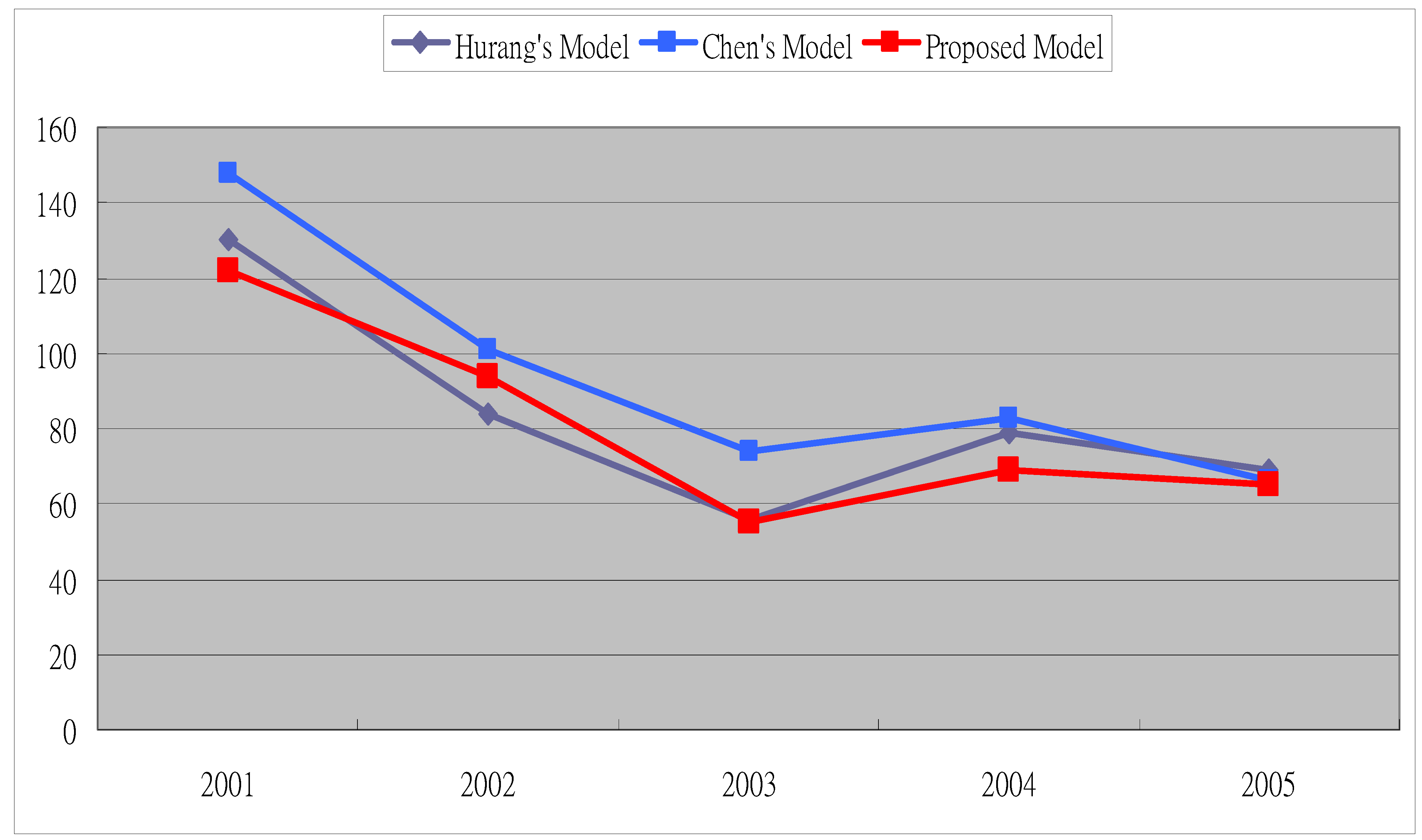

Entropy Free Full Text Forecasting The Stock Market With Linguistic Rules Generated From The Minimize Entropy Principle And The Cumulative Probability Distribution Approaches Html

How To Measure Energy Dependent Lags Without Bins In Energy Or Time Ppt Download

Https Apps Dtic Mil Dtic Tr Fulltext U2 A063120 Pdf

Maximal Entropy Random Walk Wikipedia

Mlfas 03 Neural Networks For Classification 03 Cross Entropy Loss Youtube

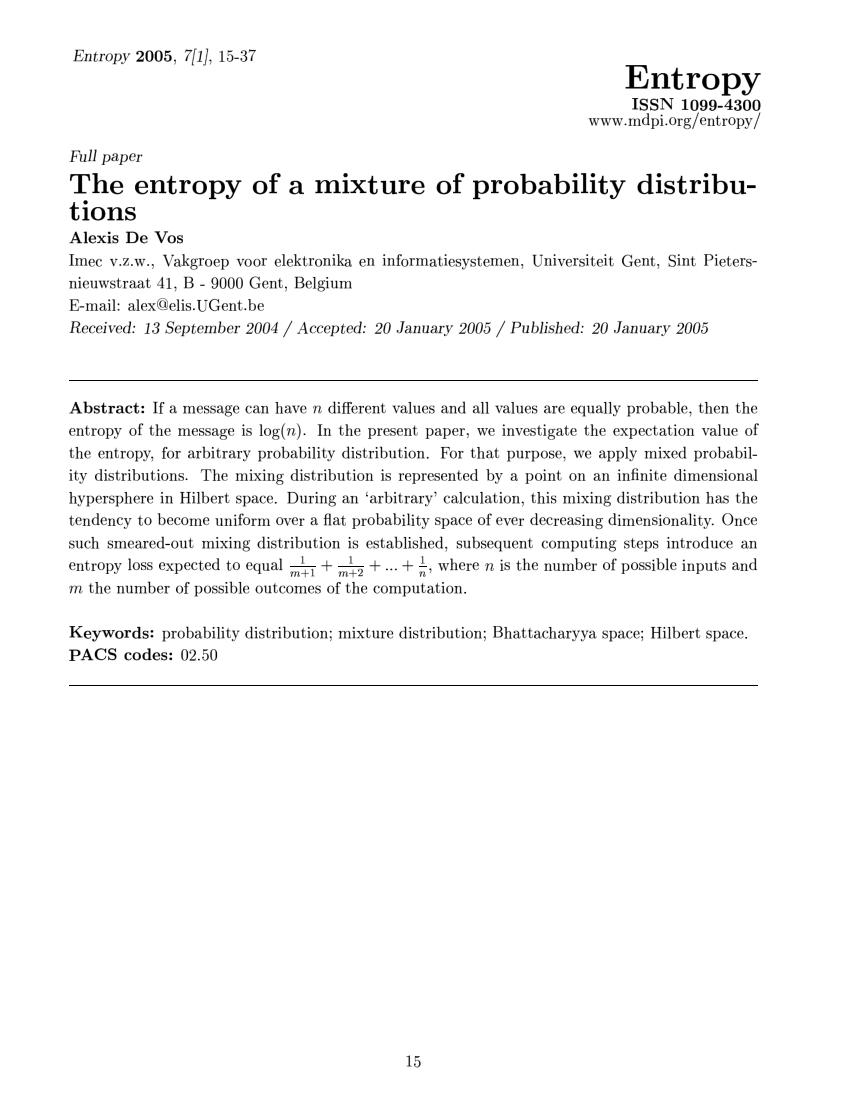

Pdf The Entropy Of A Mixture Of Probability Distributions

Https Arxiv Org Pdf 1605 00441

The Maximum Entropy Fallacy Redux

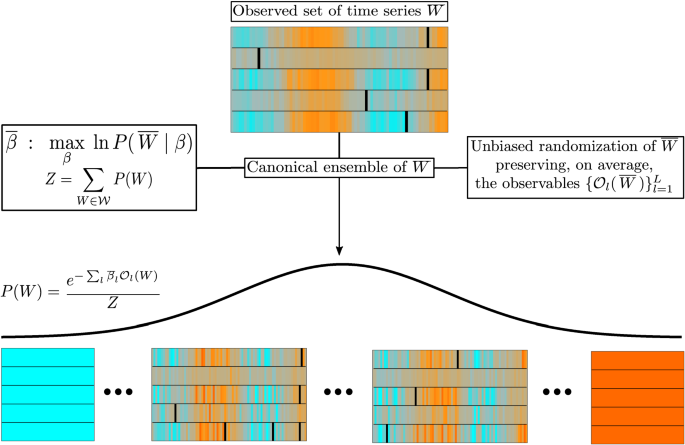

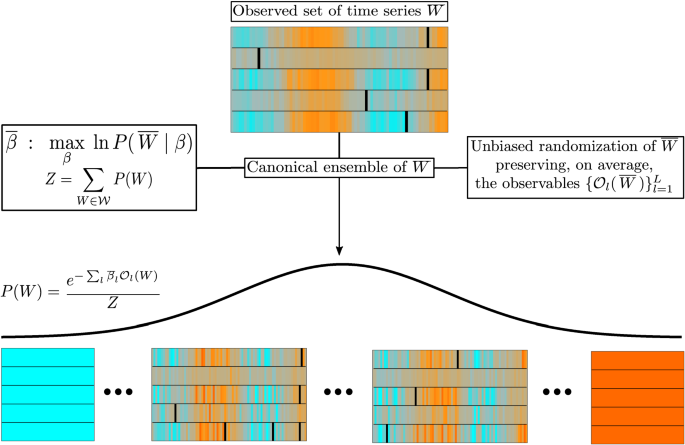

Maximum Entropy Approach To Multivariate Time Series Randomization Scientific Reports

Https Www Jstor Org Stable 4615900

Maximum Entropy An Overview Sciencedirect Topics

Integrating Molecular Simulation And Experimental Data A Bayesian Maximum Entropy Reweighting Approach Biorxiv

Forecasting The Stock Market With Linguistic Rules Generated From The Minimize Entropy Principle And The Cumulative Probability Distribution Approaches Topic Of Research Paper In Computer And Information Sciences Download Scholarly Article

Https Web Csulb Edu Tebert Teaching Lectures 528 Huffman Huffman Pdf

Entropy As A Variation Of Information For Testing The Goodness Of Fit Springerlink

Principle Of Maximum Entropy Definition Deepai

Kullback Leibler Kl Divergence

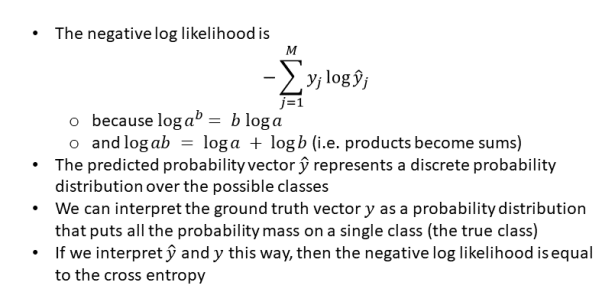

Connections Log Likelihood Cross Entropy Kl Divergence Logistic Regression And Neural Networks Glass Box

Kullback Leibler Divergence Wikipedia

Inequality In Latin America Ppt Download

Http Pillowlab Princeton Edu Teaching Statneuro2018 Slides Notes08 Infotheory Pdf

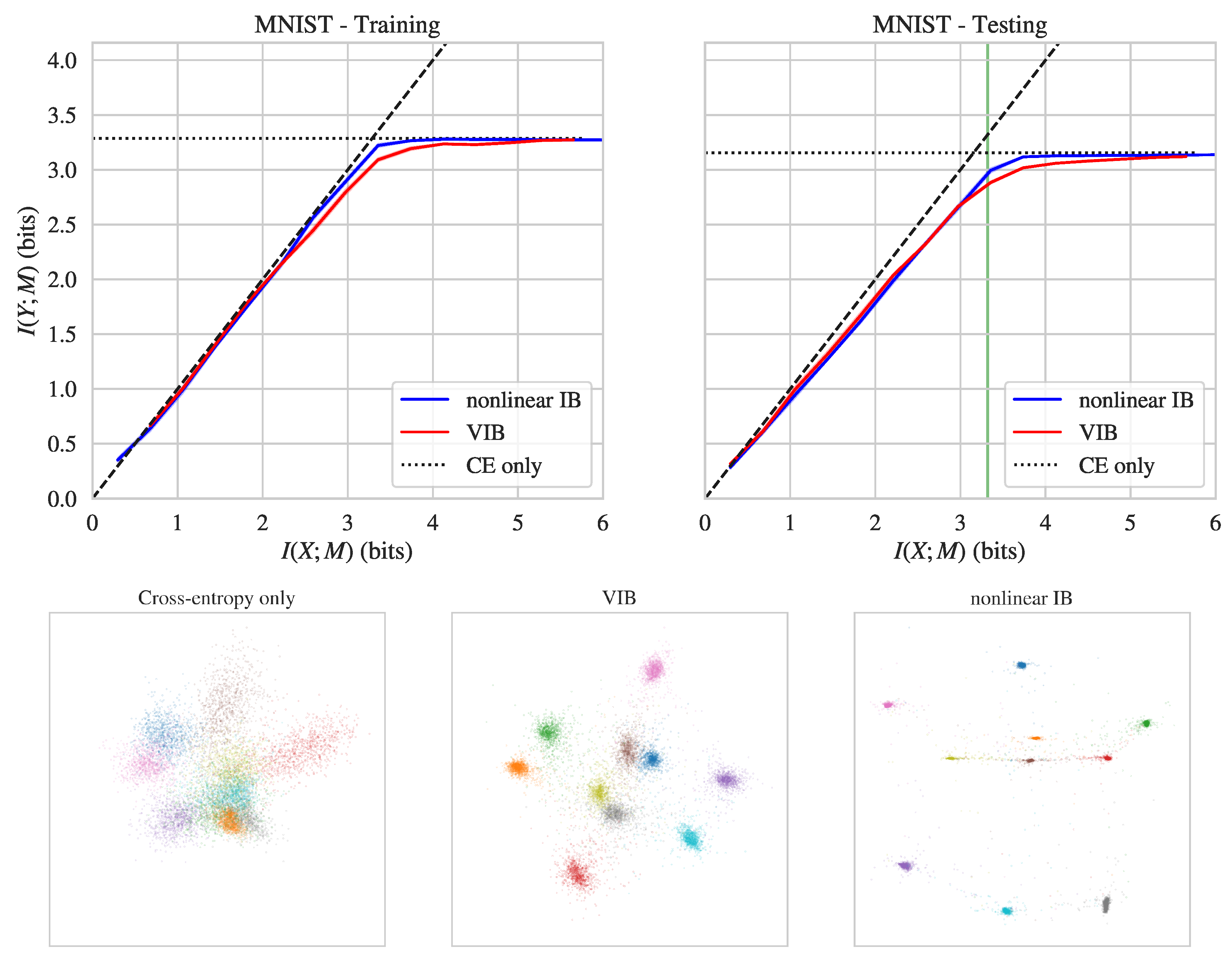

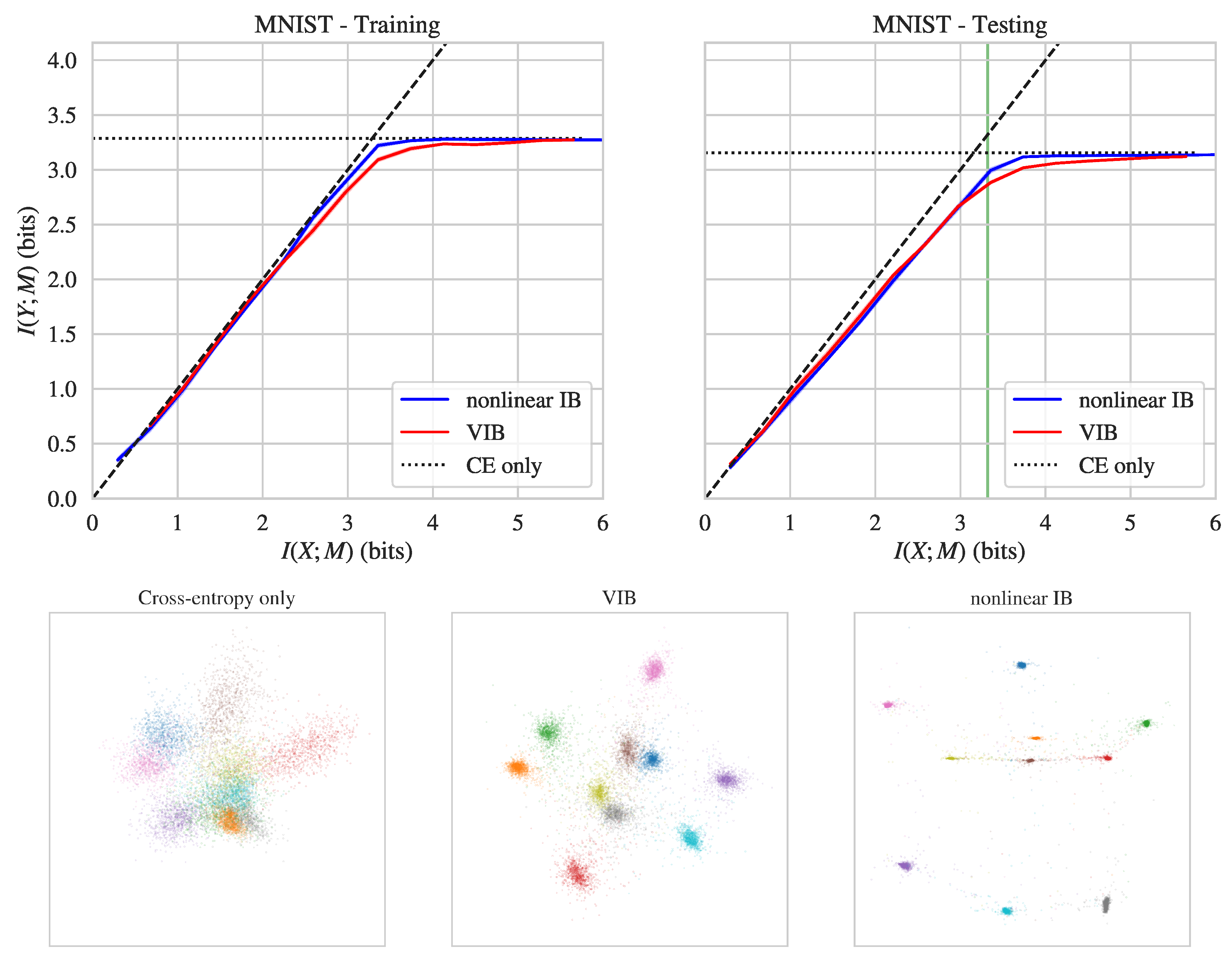

Entropy Free Full Text Nonlinear Information Bottleneck Html

Shannon Entropy An Overview Sciencedirect Topics

Lecture Notes Loss Is A Bad Thing Minimize It By A Ydobon Medium

Http Www Math Uic Edu Abramov Papers A4 Pdf

Applying Transfer Entropy To The Eeg Sapien Labs Neuroscience Human Brain Diversity Project

Computer Science Definition Of Entropy Baeldung On Computer Science

Http Papers Neurips Cc Paper 1733 Maximum Entropy Discrimination Pdf

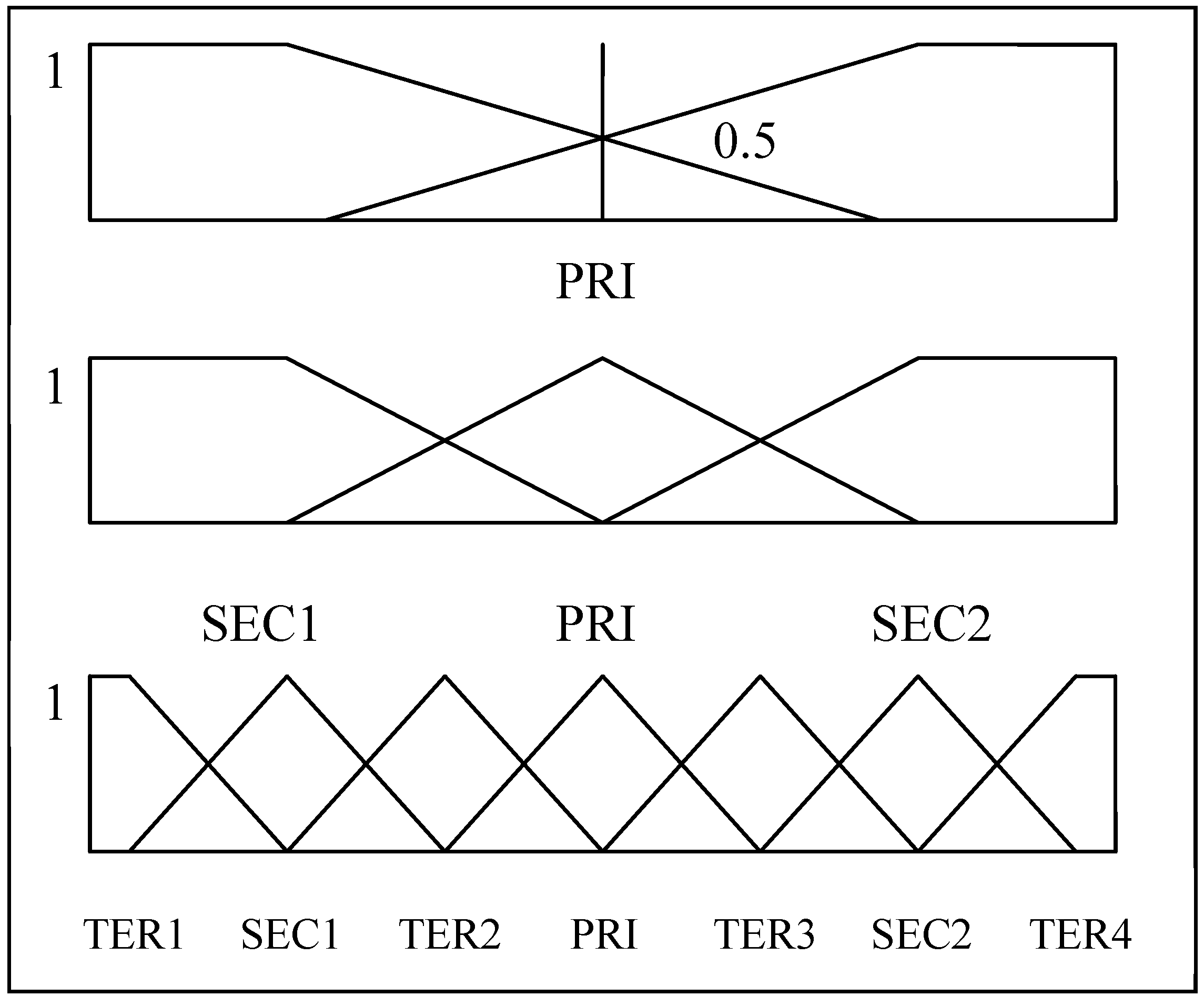

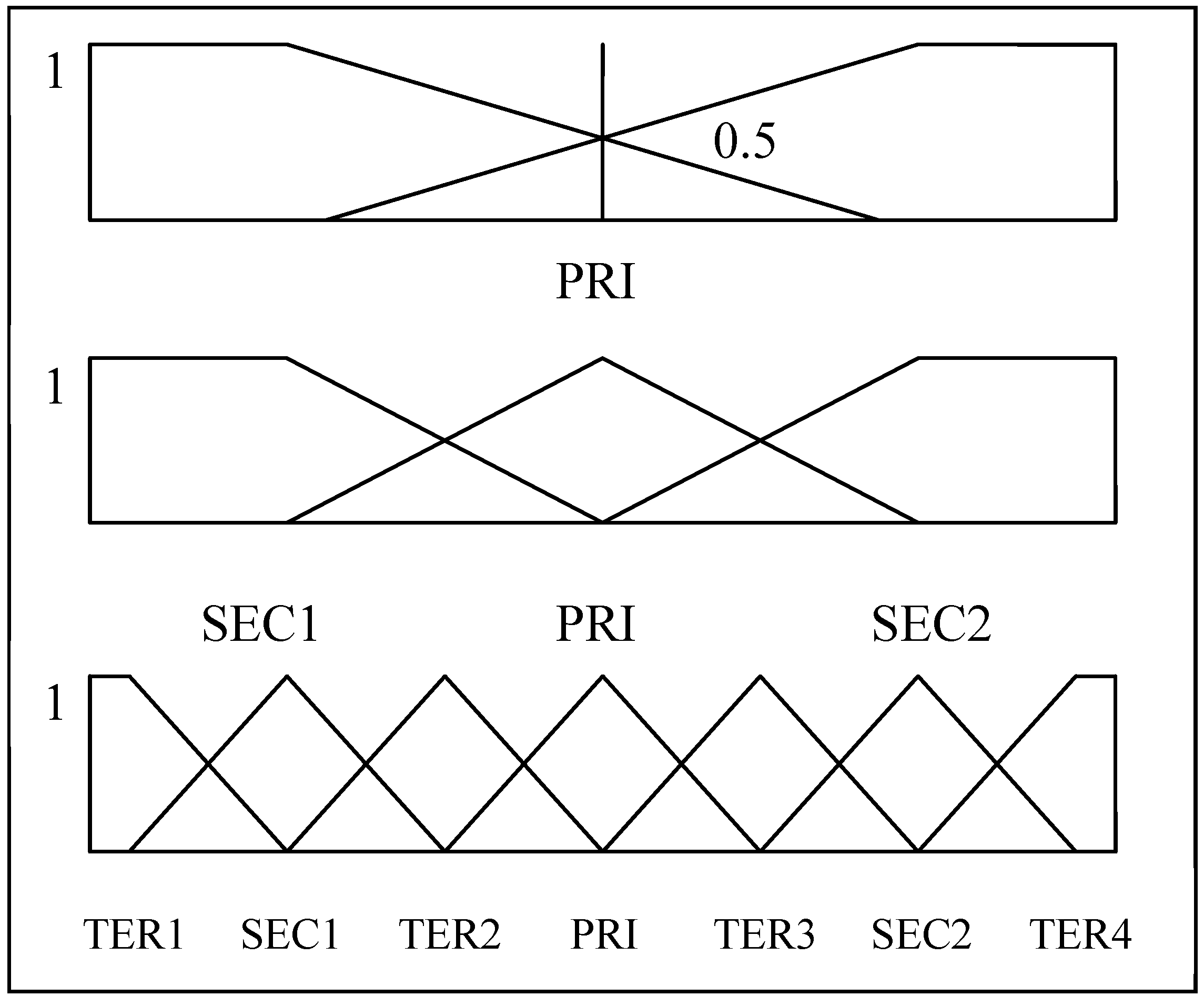

Partitioning Process Of Minimize Entropy Principle Approach Download Scientific Diagram

Https Eprint Iacr Org 2014 967 Pdf

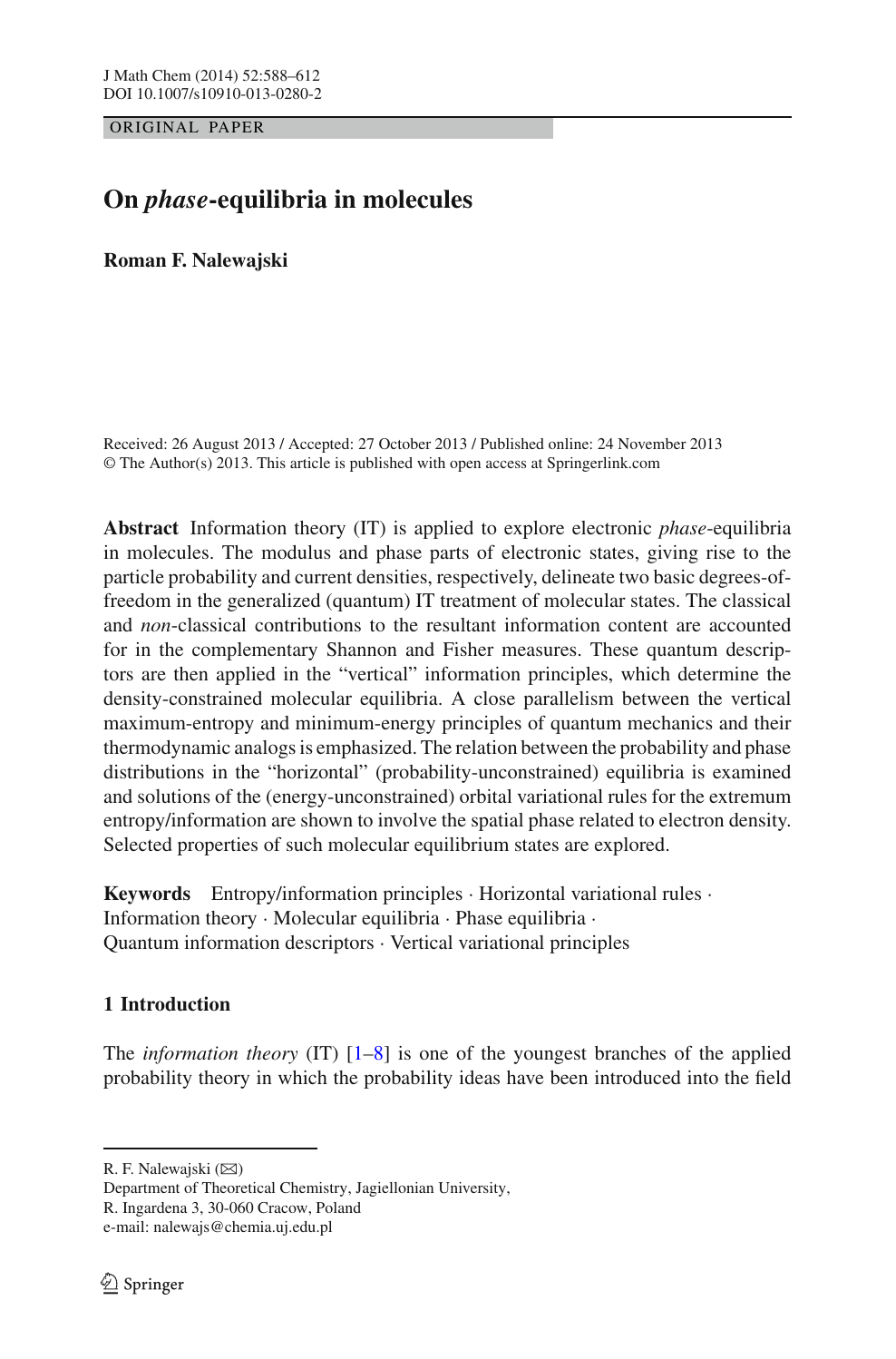

On Phase Equilibria In Molecules Topic Of Research Paper In Physical Sciences Download Scholarly Article Pdf And Read For Free On Cyberleninka Open Science Hub

Https Arxiv Org Pdf 1209 3744

Http Papers Neurips Cc Paper 1863 An Information Maximization Approach To Overcomplete And Recurrent Representations Pdf

Kullback Leibler Divergence Explained Count Bayesie

Https Www Jstor Org Stable 2331391

Combining Experiments And Simulations Using The Maximum Entropy Principle

Http Jmlr Org Papers Volume15 Archer14a Archer14a Pdf

Http Web Stanford Edu Danlass Courses Prob And Stats Winter15 Notes Infotheory Exercises Hints Solutions Pdf

Cross Entropy Loss Explained With Python Examples Data Analytics

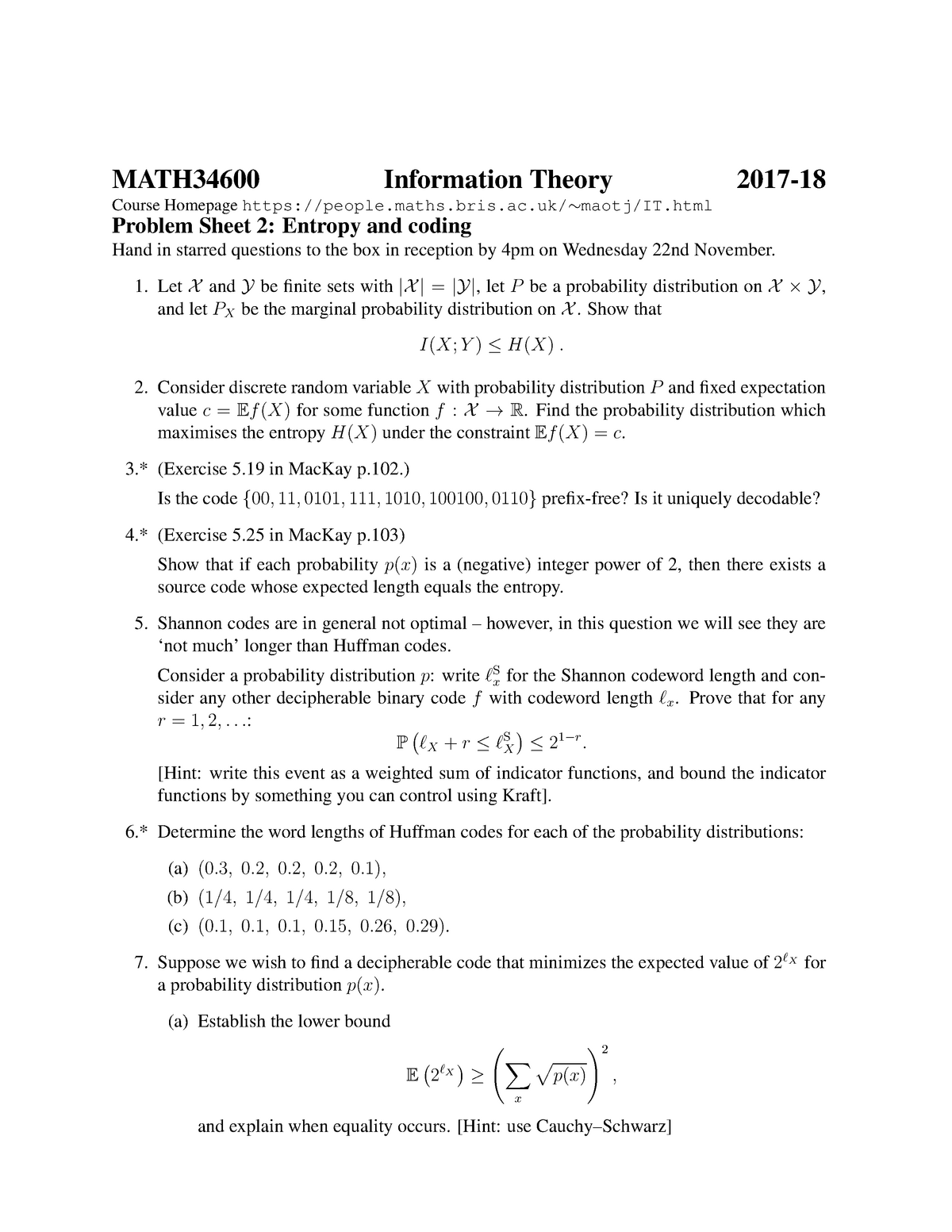

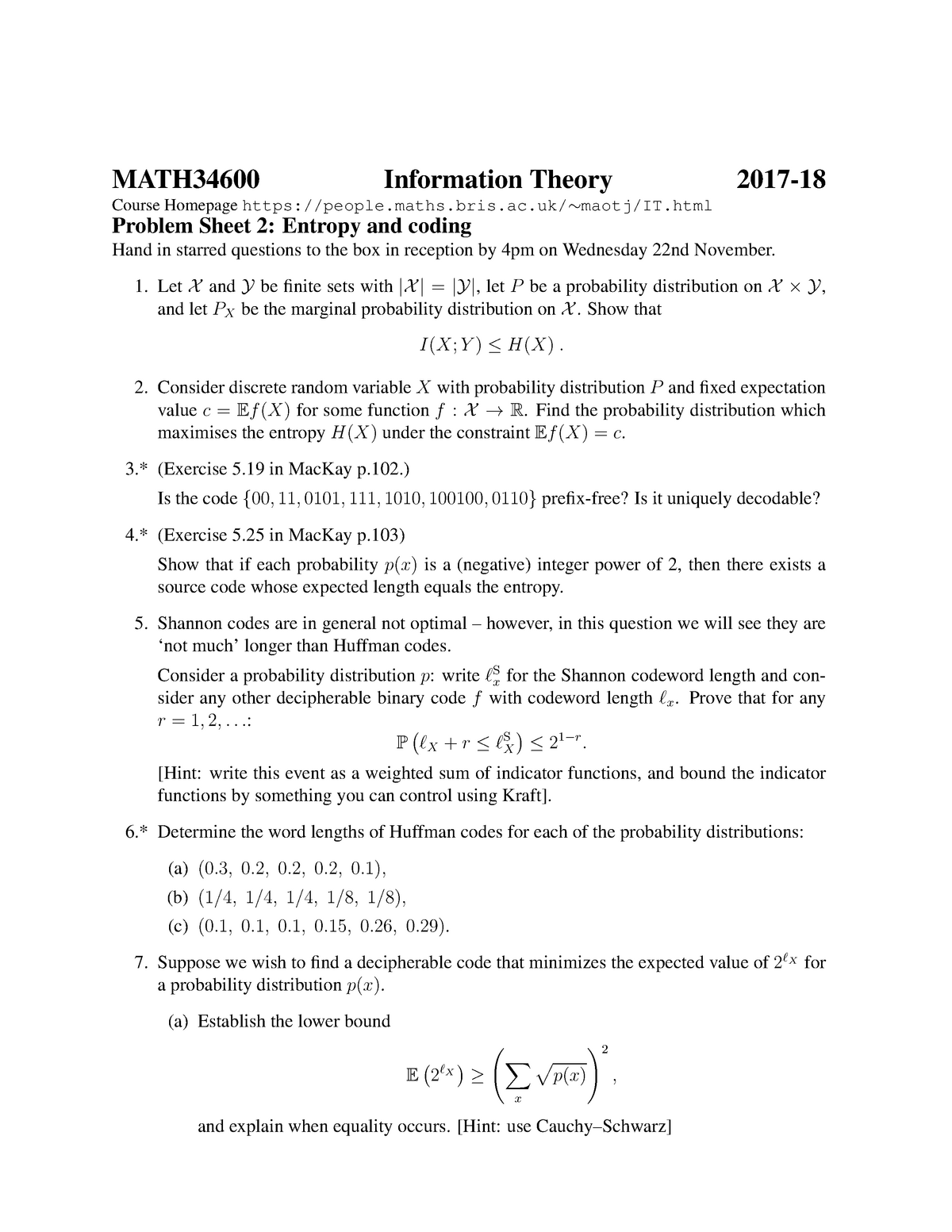

Math34600 2017 2018 Problem Sheet 2 Math34600 Information Theory 2017 18 Course Studocu

Kullback Leibler Divergence Explained Synced

A Maximum Entropy Approach To The Loss Data Aggregation Problem

Testtest 1 Nis Preprintfeb2009 Page 224 225 Created With Publitas Com

Https Courses Csail Mit Edu 6 897 Spring03 Scribe Notes L13 Lecture13 Pdf

The Mystery Of Entropy Measuring Unpredictability In Machine Learning

Understanding Entropy Cross Entropy And Cross Entropy Loss By Vijendra Singh Medium

Http Fuchsbraun Homepage T Online De Media A9b092116cdf97adffff809effffffef Pdf

Renyi Entropy And Cauchy Schwartz Mutual Information Applied To Mifs U Variable Selection Algorithm A Comparative Study

Shannon Entropy Of A Fair Dice Mathematics Stack Exchange

Giacomo Torlai On Twitter Noisy Circuits Are Simulated By Specifying A Set Of Local Kraus Operators The Output Density Operator Is Approximated As An Mpo A Complete Description Of The Quantum Channel

What Is Cross Entropy Baeldung On Computer Science

Tmdp97 40 Ifpri Publications Ifpri Knowledge Collections

Pdf Application Of Non Probabilistic Information Arcangelo Pellegrino Academia Edu

Http Www Math Uic Edu Abramov Papers A3 Pdf

Https People Csail Mit Edu Dsontag Papers Welleretal Uai14 Pdf

Cross Entropy Explained What Is Cross Entropy For Dummies

Https Www Tse Fr Eu Sites Default Files Medias Stories Semin 11 12 Statistics Bessa1 Pdf

Ppt Probability In Decline Powerpoint Presentation Free Download Id 1420066

Https Opus4 Kobv De Opus4 Fau Files 13317 Entropy 22 00091 V2 Pdf
